Data scraping is an essential tool for researchers and companies in today's digital world, providing access to a vast amount of useful information. By pulling data from various web sources, companies can gain insights, understand market trends, and make well-informed decisions.

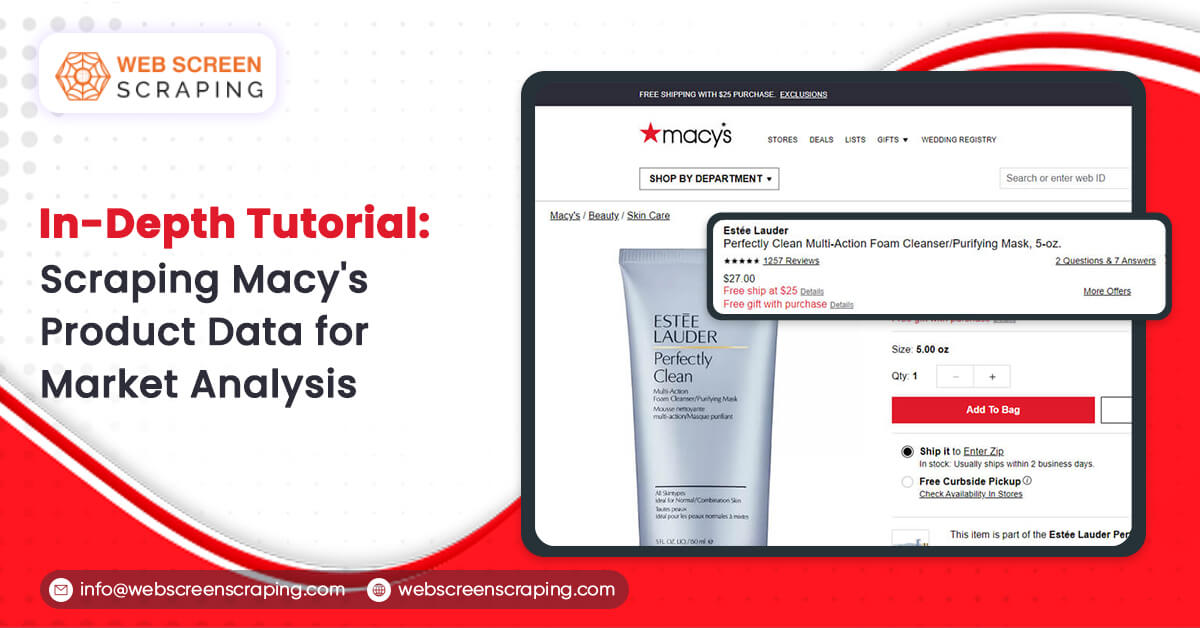

Among these sources, Macy's stands out as an online store with an extensive product selection that includes clothing, cosmetics, household essentials, and more. As a well-known retail brand, Macy's is a gold mine of information on product trends, pricing strategies, and customer preferences.

Macy's is a well-known department store chain in the United States. Its stores sell a wide range of products, including apparel, shoes, home furnishings, jewelry, cosmetics, and appliances. With stores across the country as well as an online store, the company offers customers a variety of ways to shop. Many customers trust Macy's for its wide selection, quality merchandise, and strong customer service.

What is Macy’s Product Data Scraping?

Scraping Macy's is the practice of systematically gathering data from the Macy's website. The process can extract product names, descriptions, prices, images, availability, and other relevant data points: a scraper visits Macy's product pages, collects specific data items, and aggregates them for later use.

Scraped Macy's product data supports price comparison, market analysis, trend detection, and other business and research needs. The scraping itself can be done with specialized software or custom scripts. Either way, it's imperative to scrape in line with Macy's terms of service and ethical standards.

Tools Needed to Scrape Macy's Product Data

Python's abundant libraries and versatility make it the go-to programming language for web scraping. Two of its libraries are crucial for this tutorial:

BeautifulSoup

A Python package for parsing HTML and XML documents. It makes it easy to find and extract specific data items from the HTML structure.

Requests

This library sends HTTP requests to fetch web pages, giving us access to the data on the Macy's website.

Understanding the Structure of HTML

Understanding the HTML layout of Macy's web pages is essential for web scraping. Browser developer tools (such as Firefox Developer Tools or Chrome DevTools) let you examine the components of a page, revealing the underlying HTML structure: the tags, class names, and IDs associated with the data you want.

How to Perform Macy’s Product Data Scraping?

In keeping with the holiday spirit, this tutorial scrapes Macy's search results for "Christmas sweaters" and collects the following information for each product:

- Brand name

- Price

- Description

- Rating

- Image URL

- Product URL

To get past anti-bot defenses, we'll also route our requests through ScraperAPI and let it manage IP rotation for us.

So, let's get started!

Requirements

The following are the project's prerequisites:

- Python 3.8 or later (preferred)

- json (part of Python's standard library, so no installation is needed)

- Requests

- BeautifulSoup

- lxml

To install the third-party libraries, run:

pip install requests beautifulsoup4 lxml

Once they're installed, create a project directory and move into it:

mkdir macys-scraper

cd macys-scraper

Step 1: Set Up the Project

Create a new file named macy-scraper.py and import the required libraries at the top:

import requests

from bs4 import BeautifulSoup

import json

If we request Macy's pages directly, Macy's is likely to detect our scraper and block our IP address right away, so we'll send our GET request through ScraperAPI instead.

ScraperAPI avoids this problem by managing IP rotation for us, which keeps the scraping process running even on the most difficult-to-scrape e-commerce sites.

Using ScraperAPI is straightforward: all you need to send is your API key and the URL of the page you want to scrape.

Once you have your API key, let's add our first two variables:

API_KEY = "API_KEY"  # replace with your ScraperAPI key

url = "https://www.macys.com/shop/featured/christmas%20sweaters"
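The %20 in the URL is simply an encoded space. If you later want to scrape a different search, a small helper can build the URL for you. This is a minimal sketch: build_search_url is a hypothetical helper, and it assumes other queries follow the same /shop/featured/<query> pattern as the URL above, which this tutorial doesn't confirm.

```python
from urllib.parse import quote

# Base path taken from the tutorial's URL; assumed (not verified) to work for other queries.
BASE_SEARCH_URL = "https://www.macys.com/shop/featured/"


def build_search_url(query: str) -> str:
    """Hypothetical helper: lowercase the query and percent-encode it for the URL path."""
    # quote() encodes a space as %20, matching "christmas%20sweaters".
    return BASE_SEARCH_URL + quote(query.strip().lower(), safe="")


url = build_search_url("Christmas sweaters")
print(url)  # https://www.macys.com/shop/featured/christmas%20sweaters
```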

Step 2: Make a Request to the URL

To make this request, we use the Requests library to send a payload to ScraperAPI's default endpoint:

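payload = {"api_key": API_KEY, "url": url}

r = requests.get("https://api.scraperapi.com", params=payload, timeout=60)  # avoid hanging forever on a slow response

r.raise_for_status()  # stop with an error if the request failed

html_response = r.text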

The payload is a dictionary containing our API key and the URL we want to scrape. The requests.get() method sends it to ScraperAPI as query parameters in a GET request, and the html_response variable holds the HTML content of the search results page that the request returns.
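Because the payload goes in params, it is URL-encoded into the query string, so Macy's URL travels as a single encoded value (its own %20 becomes %2520, which any standard query-string parser decodes back). The standard-library sketch below previews that query string without sending anything; it illustrates the encoding rather than reproducing Requests' internals line for line.

```python
from urllib.parse import parse_qs, urlencode

API_KEY = "API_KEY"  # placeholder, as in the tutorial
url = "https://www.macys.com/shop/featured/christmas%20sweaters"
payload = {"api_key": API_KEY, "url": url}

# Encode the payload as a query string, which is what passing params= does for a GET.
query_string = urlencode(payload)
print("https://api.scraperapi.com/?" + query_string)

# Decoding the query string once recovers the original target URL.
decoded_url = parse_qs(query_string)["url"][0]
print(decoded_url == url)  # True
```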

Step 3: Parse the HTML

Parsing the HTML with BeautifulSoup turns the raw markup into a hierarchical BeautifulSoup data structure, which is much easier to navigate than a raw HTML string.

soup = BeautifulSoup(html_response, "lxml")
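To make "hierarchical" concrete, here is a sketch that parses a small invented HTML fragment (not real Macy's markup) and moves down and back up the tree. It uses Python's built-in "html.parser" so it runs without lxml; passing "lxml" as the tutorial does should give the same result for this snippet.

```python
from bs4 import BeautifulSoup

# Made-up HTML fragment for illustration; it is not Macy's real markup.
html = """
<div id="results">
  <div class="productThumbnail">
    <span class="productBrand"> Karen Scott </span>
  </div>
</div>
"""

# "html.parser" ships with Python; the tutorial passes "lxml" instead.
soup = BeautifulSoup(html, "html.parser")

results = soup.find("div", id="results")  # first <div> whose id is "results"
brand = results.find("span")              # searches only inside that <div>

print(brand.text.strip())             # Karen Scott
print(brand.parent["class"])          # ['productThumbnail']
print(brand.parent.parent.get("id"))  # results
```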

However, before navigating the parsed tree, we must understand how to target our desired elements.

Step 4: Understanding Macy's Site Structure

When we search for our query, the Macy's website returns results similar to the following:

As the annotated screenshot below shows, we can retrieve every product listed under "Christmas sweaters", including its brand name, price, description, rating, image, and product URL.

Understanding a page's HTML layout is critical, but you don't have to be an HTML expert: most common web browsers come with built-in Developer Tools.

To open Developer Tools, right-click the webpage and select "Inspect", or use the shortcut "CTRL+SHIFT+I" on Windows or "Option + ⌘ + I" on Mac. This opens a panel showing the HTML of the page we're targeting.

As can be seen above, each product is listed as its own element, so we need to collect every one of these listings.

To scrape an HTML element, we need something that identifies it: a class name, another HTML attribute, or the element's "id". In this case, we'll use the class name.
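Each kind of identifier maps to a slightly different BeautifulSoup lookup. The sketch below runs all three against invented markup; the searchResults id and the data-product-id attribute are made up for illustration, while productThumbnail is the class name used in this tutorial.

```python
from bs4 import BeautifulSoup

# Invented markup showing the three kinds of identifiers mentioned above.
html = """
<div id="searchResults">
  <div class="productThumbnail" data-product-id="123">Sweater A</div>
  <div class="productThumbnail" data-product-id="456">Sweater B</div>
</div>
"""
soup = BeautifulSoup(html, "html.parser")

by_id = soup.find(id="searchResults")                              # by id
by_class = soup.find_all("div", class_="productThumbnail")         # by class name
by_attribute = soup.find("div", attrs={"data-product-id": "456"})  # by any attribute

print(by_id.name)                      # div
print([div.text for div in by_class])  # ['Sweater A', 'Sweater B']
print(by_attribute.text)               # Sweater B
```

Attributes with hyphens, such as data-* attributes, can't be passed as Python keyword arguments, which is why the last lookup uses the attrs dictionary.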

After inspecting the page, we find that every product container on the search results page is a div element with the class productThumbnail (at the time of writing).

We can collect these elements with BeautifulSoup's find_all() method, which locates every div with the class productThumbnail:

product_containers = soup.find_all("div", class_="productThumbnail")

Each of these div elements is one product container. We can use the same inspection process to find the class name of every other element we want to scrape.
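One detail worth knowing: class_ matches an element if any one of its classes equals the given name, so a container that carries extra classes is still found, while a look-alike name is not. The CSS-selector equivalent is select("div.productThumbnail"). The markup below, including the sponsored and productThumbnailWrapper classes, is invented for illustration.

```python
from bs4 import BeautifulSoup

# Invented markup: one exact match, one with an extra class, one look-alike name.
html = """
<div class="productThumbnail">Plain</div>
<div class="productThumbnail sponsored">Extra class</div>
<div class="productThumbnailWrapper">Different class</div>
"""
soup = BeautifulSoup(html, "html.parser")

# class_ matches if any one of the element's classes equals the name exactly.
with_find_all = soup.find_all("div", class_="productThumbnail")

# The CSS-selector equivalent; select() always returns a list.
with_select = soup.select("div.productThumbnail")

print([d.text for d in with_find_all])  # ['Plain', 'Extra class']
print([d.text for d in with_select])    # ['Plain', 'Extra class']
```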

Step 5: Extract the Product Data

To retrieve the required data, we loop over each product container with a for loop and use the select_one() and find() methods, which return the first matching element:

product_data_list = []

for product_container in product_containers:

    # Extract brand

    brand_element = product_container.select_one(".productBrand")

    brand_name = brand_element.text.strip() if brand_element else None

    # Extract price

    price_element = product_container.select_one(".prices .regular")

    price = price_element.text.strip() if price_element else None

    # Extract description (stored in the link's title attribute)

    description_element = product_container.select_one(".productDescription .productDescLink")

    description = description_element.get("title", "").strip() if description_element else None

    # Extract rating (stored in the aria-label attribute)

    rating_element = product_container.select_one(".stars")

    rating = rating_element.get("aria-label") if rating_element else None

    # Extract image URL

    image_element = product_container.find("img", class_="thumbnailImage")

    image_url = image_element.get("src") if image_element else None

    # Extract product URL (assumption: the description link's href holds the product page path)

    product_url = description_element.get("href") if description_element else None

After extracting the fields, and still inside the loop, we collect them in a dictionary named product_data and append it to product_data_list:

    # Still inside the for loop: collect this product's fields in a dictionary

    product_data = {

        "Product Brand Name": brand_name,

        "Price": price,

        "Description": description,

        "Rating": rating,

        "Image URL": image_url,

        "Product URL": product_url,

    }

    # Append the product data to the list

    product_data_list.append(product_data)

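In the loop, .text returns an element's text content and .strip() removes any leading or trailing whitespace. Each conditional expression falls back to None when a product is missing that element, so one incomplete listing doesn't stop the scraper.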
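To check the extraction logic without hitting the live site, here is a self-contained sketch that applies the same selectors to invented markup. The HTML, the helper names text_or_none and extract_product, and the use of the description link's href as the product URL are all assumptions for illustration; real Macy's pages may differ.

```python
from bs4 import BeautifulSoup

# Invented markup mimicking the class names used in the tutorial's selectors.
# The second product deliberately lacks price, description, rating, and image.
html = """
<div class="productThumbnail">
  <div class="productBrand"> Karen Scott </div>
  <div class="prices"><span class="regular"> $49.50 </span></div>
  <div class="productDescription">
    <a class="productDescLink" href="/shop/product/example?ID=1"
       title="Women's Holiday Sweater"></a>
  </div>
  <div class="stars" aria-label="4.4 out of 5 rating with 10 reviews"></div>
  <img class="thumbnailImage" src="https://example.com/1.jpg">
</div>
<div class="productThumbnail">
  <div class="productBrand">Style &amp; Co</div>
</div>
"""


def text_or_none(element):
    """Return the element's stripped text, or None when the element is missing."""
    return element.text.strip() if element is not None else None


def extract_product(container):
    """Hypothetical helper mirroring the tutorial's loop body."""
    desc = container.select_one(".productDescription .productDescLink")
    rating = container.select_one(".stars")
    image = container.find("img", class_="thumbnailImage")
    return {
        "Product Brand Name": text_or_none(container.select_one(".productBrand")),
        "Price": text_or_none(container.select_one(".prices .regular")),
        "Description": desc.get("title") if desc is not None else None,
        "Rating": rating.get("aria-label") if rating is not None else None,
        "Image URL": image.get("src") if image is not None else None,
        # Assumption: the description link's href is the product URL.
        "Product URL": desc.get("href") if desc is not None else None,
    }


soup = BeautifulSoup(html, "html.parser")
products = [extract_product(c) for c in soup.find_all("div", class_="productThumbnail")]
for product in products:
    print(product)
```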

Step 6: Write the Results to a JSON File

Finally, to store the scraped data, we open a file in write mode (by passing 'w' as the second argument to open()) and use the json.dump() function to write the product data list to it:

output_file = "Macy_product_results.json"

with open(output_file, "w", encoding="utf-8") as json_file:

    json.dump(product_data_list, json_file, indent=2)

print(f"Scraped data has been saved to {output_file}")
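Before relying on the file, it can be worth reading it back with json.load() to confirm that it holds valid JSON and that nothing was lost. This sketch writes two sample records (values borrowed from the sample output) to a temporary directory, so it doesn't touch your real results file.

```python
import json
import os
import tempfile

# Sample records shaped like the scraper's output.
product_data_list = [
    {"Product Brand Name": "Karen Scott", "Price": "$49.50"},
    {"Product Brand Name": "Style & Co", "Price": "$59.50"},
]

# Write to a temporary directory so the check doesn't overwrite real results.
with tempfile.TemporaryDirectory() as tmp_dir:
    output_file = os.path.join(tmp_dir, "Macy_product_results.json")
    with open(output_file, "w", encoding="utf-8") as json_file:
        json.dump(product_data_list, json_file, indent=2)

    # Read the file back and compare it with what was written.
    with open(output_file, encoding="utf-8") as json_file:
        loaded = json.load(json_file)

print(loaded == product_data_list)  # True
```

By default json.dump() escapes non-ASCII characters (for example, é is written as \u00e9); passing ensure_ascii=False keeps them readable in the UTF-8 file.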

Here's a preview of the output from the scraper, as seen in the Macy_product_results.json file:

{

"Product Brand Name": "Karen Scott",

"Price": "$49.50",

"Description": "Women's Holiday Sweater, Created for Macy's",

"Rating": "4.4235 out of 5 rating with 1072 reviews",

"Image URL": "https://slimages.macysassets.com/is/image/MCY/products/1/optimized/26374821_fpx.tif?$browse$&wid=224&fmt=jpeg",

"Product URL": "/shop/product/karen-scott-womens-holiday-sweater-created-for-macys?ID=14175633&isDlp=true"

},

{

"Product Brand Name": "Style & Co",

"Price": "$59.50",

"Description": "Women's Holiday Themed Whimsy Sweaters, Regular & Petite, Created for Macy's",

"Rating": "4.2391 out of 5 rating with 46 reviews",

"Image URL": "https://slimages.macysassets.com/is/image/MCY/products/0/optimized/24729680_fpx.tif?$browse$&wid=224&fmt=jpeg",

"Product URL": "/shop/product/style-co-womens-holiday-themed-whimsy-sweaters-regular-petite-created-for-macys?ID=16001406&isDlp=true"

},

{

"Product Brand Name": "Charter Club",

"Price": "$59.50",

"Description": "Holiday Lane Women's Festive Fair Isle Snowflake Sweater, Created for Macy's",

"Rating": "4.8571 out of 5 rating with 7 reviews",

"Image URL": "https://slimages.macysassets.com/is/image/MCY/products/2/optimized/23995392_fpx.tif?$browse$&wid=224&fmt=jpeg",

"Product URL": "/shop/product/holiday-lane-womens-festive-fair-isle-snowflake-sweater-created-for-macys?ID=15889755&isDlp=true"

},

… More JSON Data
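The scraped values are display strings: prices carry a "$", ratings are sentences, and product URLs are relative paths. If you plan to analyze the data, a post-processing step can convert them into typed values. This is a hypothetical sketch: normalize_record and the "Review Count" key are made up, the regular expressions are written only against the sample strings above, and it assumes the relative URLs belong to www.macys.com.

```python
import re
from decimal import Decimal
from urllib.parse import urljoin

# Assumption: the relative "Product URL" values are paths on www.macys.com.
SITE_ROOT = "https://www.macys.com"

# Patterns written against the sample output; other formats won't match.
PRICE_PATTERN = re.compile(r"\$\s*([\d,]+(?:\.\d+)?)")
RATING_PATTERN = re.compile(r"([\d.]+) out of 5 rating with (\d+) reviews")


def normalize_record(record):
    """Hypothetical post-processing step: convert display strings into typed values."""
    cleaned = dict(record)

    # "$49.50" -> Decimal("49.50"); if several prices appear, the first one is kept.
    price_match = PRICE_PATTERN.search(record.get("Price") or "")
    cleaned["Price"] = Decimal(price_match.group(1).replace(",", "")) if price_match else None

    # "4.4235 out of 5 rating with 1072 reviews" -> 4.4235 and 1072.
    rating_match = RATING_PATTERN.search(record.get("Rating") or "")
    cleaned["Rating"] = float(rating_match.group(1)) if rating_match else None
    cleaned["Review Count"] = int(rating_match.group(2)) if rating_match else None

    # Relative path -> absolute URL; already-absolute URLs pass through unchanged.
    if record.get("Product URL"):
        cleaned["Product URL"] = urljoin(SITE_ROOT, record["Product URL"])

    return cleaned


sample = {
    "Product Brand Name": "Karen Scott",
    "Price": "$49.50",
    "Rating": "4.4235 out of 5 rating with 1072 reviews",
    "Product URL": "/shop/product/karen-scott-womens-holiday-sweater-created-for-macys?ID=14175633&isDlp=true",
}
print(normalize_record(sample))
```

Decimal is used for prices to avoid binary floating-point rounding. Strings that don't match the patterns fall back to None rather than raising an error.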

Conclusion

Scraping data from the Macy's website offers plenty of opportunities for academics, businesses, and individuals seeking insights into industry trends, customer preferences, and pricing strategies. However, you must approach the process ethically: follow Macy's terms of service and use responsible scraping techniques.

Respecting Macy's anti-scraping measures matters too. Following these guidelines helps keep your work legally compliant and preserves fair access to online resources, while building credibility and trust and supporting sustainable long-term practices.

Web Screen Scraping follows ethical scraping practices that benefit both the scraper and the wider online community, encouraging cooperation and trust between data collectors and sites like Macy's.